Non-Human Identity Lifecycle Management Best Practices

Non-human identities (NHIs) include service accounts, AI agents, bots, automation scripts, machine credentials, API keys, and more; they are ubiquitous in cloud and AI environments. Unlike human identities, NHIs often run unattended, are created programmatically, and can persist invisibly across workloads. They present unique risks, including long-lived credentials, overprovisioned roles, lack of ownership, and insufficient monitoring. Mitigating these risks requires implementing tools and processes to manage NHIs throughout their lifecycle across the five stages illustrated in the diagram below.

The following table provides a high-level summary of the measures that must be implemented at each of these stages.

This article recommends best practices for implementing non-human and agent identity lifecycle management within AWS, Azure, GCP, and hybrid cloud environments. You will learn how to reduce risk by applying controls tailored to the scale, automation, and velocity of NHIs and AI agents.

Summary of best practices for managing the non-human and AI agent identity lifecycle


Monitor all non-human and AI agent identities

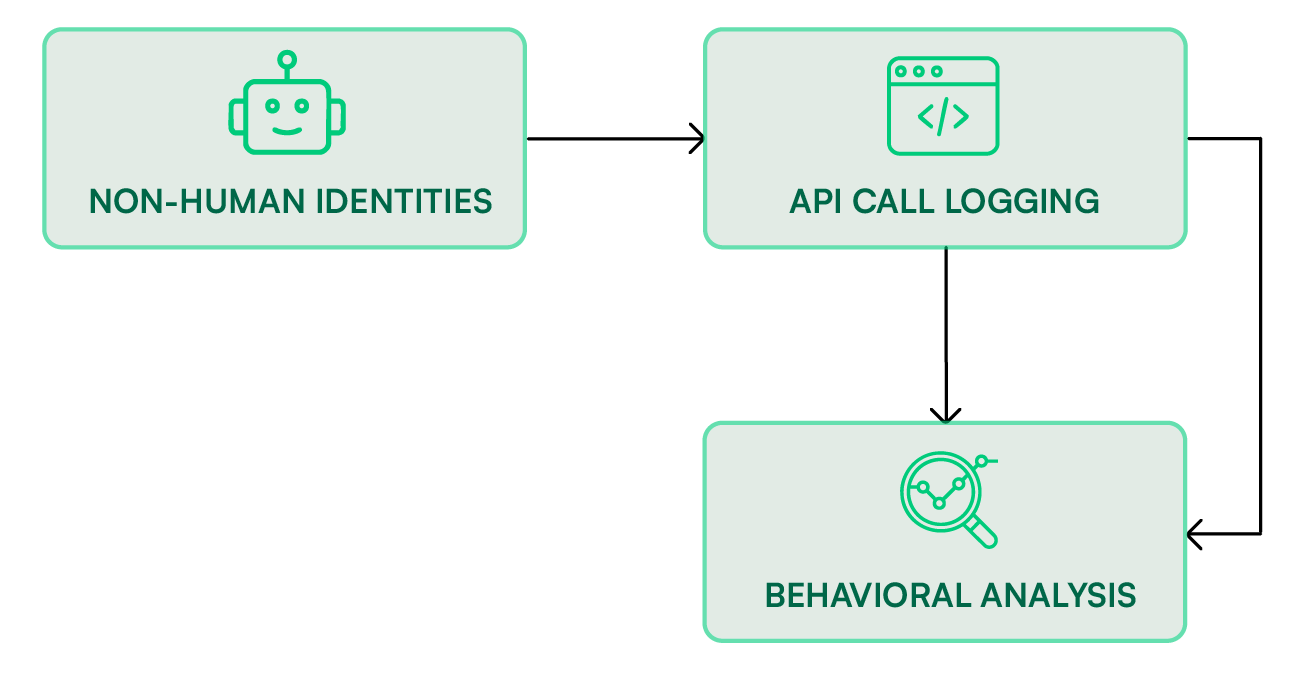

NHIs and AI agents operate continuously without human login events while performing privileged actions such as provisioning infrastructure, accessing sensitive data, or invoking critical APIs. This makes traditional identity monitoring methods, which rely on session tracking and user logins, ineffective for NHI and AI agent oversight. Instead, organizations must shift their visibility focus toward API-level telemetry and behavioral monitoring.

In an ideal NHI and AI agent lifecycle management framework, monitoring serves as the continuous feedback loop that validates whether deployed identities behave as expected. Each NHI—be it a service principal in Azure, a workload identity in GCP, or an application role in AWS—should have its activity observed in real time against established behavioral baselines. This involves collecting and analyzing logs such as AWS CloudTrail, Azure Activity Logs, and GCP Audit Logs to detect deviations in how identities are used.

By mapping API call patterns, timing, and destinations, organizations can identify suspicious deviations early. Automated anomaly detection systems, often enhanced with machine learning, can help distinguish between legitimate workload scaling events and genuine indicators of misuse or compromise.
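The baseline comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not a production detector: the `ApiEvent` schema, the field names, and the sample identities are assumptions made for the example, and real audit logs (CloudTrail, Azure Activity Logs, GCP Audit Logs) would first need to be parsed into this shape. It also uses simple set membership rather than the machine learning techniques mentioned above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ApiEvent:
    """One audit-log record reduced to the fields used here (hypothetical schema)."""
    identity: str  # e.g., a service principal or role name
    action: str    # API operation name
    region: str    # where the call was made
    hour: int      # hour of day, 0-23 (UTC)


def build_baseline(history):
    """Map each identity to the (action, region) pairs and hours seen historically."""
    baseline = {}
    for ev in history:
        entry = baseline.setdefault(ev.identity, {"calls": set(), "hours": set()})
        entry["calls"].add((ev.action, ev.region))
        entry["hours"].add(ev.hour)
    return baseline


def find_deviations(baseline, events):
    """Return (event, reason) tuples for events that fall outside the baseline."""
    findings = []
    for ev in events:
        known = baseline.get(ev.identity)
        if known is None:
            findings.append((ev, "identity has no baseline"))
        elif (ev.action, ev.region) not in known["calls"]:
            findings.append((ev, "unseen action/region pair"))
        elif ev.hour not in known["hours"]:
            findings.append((ev, "unusual hour"))
    return findings


# Illustrative data only: a pipeline identity that normally deploys in one region.
history = [
    ApiEvent("deploy-pipeline", "CreateDeployment", "eastus", 2),
    ApiEvent("deploy-pipeline", "CreateDeployment", "eastus", 3),
]
new_events = [
    ApiEvent("deploy-pipeline", "CreateDeployment", "eastus", 2),
    ApiEvent("deploy-pipeline", "CreateVirtualMachine", "brazilsouth", 2),
]
findings = find_deviations(build_baseline(history), new_events)
for ev, reason in findings:
    print(f"{ev.identity}: {ev.action} in {ev.region} -> {reason}")
```

A set-based baseline like this will flag every legitimate new behavior, so in practice it would likely be paired with an allowlist or a review step before any alert is raised.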

In 2023, a financial services firm experienced a breach when attackers exploited an Azure service principal, a non-human identity used for automated deployment pipelines. The compromised service principal had been granted excessive permissions to deploy infrastructure across multiple subscriptions. Attackers obtained its secret key from a vulnerable CI/CD system, then used the credential to spin up cryptocurrency mining resources across regions. Because no human login was involved, traditional identity alerts never triggered. The breach was only discovered after cost anomalies and unusual resource creation patterns appeared in Azure Activity Logs.

This incident underscores the unique nature of NHI monitoring. Specifically, visibility must extend beyond authentication events to encompass continuous behavioral analysis. Permissions set for NHIs require specialized governance frameworks that differ from traditional human IAM approaches. While human identities can be managed through time-based access reviews and multi-factor authentication, NHIs need programmatic controls like just-in-time access, automated rotation of credentials, and strict adherence to the principle of least privilege (PoLP).

Implement the principle of least privilege

As mentioned above, applying PoLP to non-human and AI agent identities is one of the most effective ways to reduce the blast radius of any potential compromise. However, it remains one of the hardest practices to enforce. NHIs (e.g., service accounts, workload identities, application roles, or CI/CD credentials) and AI agents are often granted excessive permissions simply to “make things work.” Over time, this operational convenience leads to silent privilege creep, expanding the risk surface across environments.

A well-structured least-privilege model for NHIs and AI agents starts by defining explicit permission boundaries and progressively tightening them as usage patterns are observed. This requires integrating automated policy analysis to continuously evaluate granted permissions against actual activity. Modern cloud platforms already provide mechanisms—such as AWS IAM Access Analyzer, Azure Privileged Identity Management, and GCP IAM Recommender—to identify and revoke unused or overly broad privileges.

The following table describes a number of example scenarios and what both overprivileged and properly refined privilege look like for each.

Enforcing granular permissions and minimizing each NHI's scope lets organizations constrain machine-driven actions to only what is operationally necessary. Such granularity limits the damage from a compromise and enforces predictable, auditable behavior throughout the NHI lifecycle.
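To make the idea of evaluating granted permissions against actual activity concrete, here is a small Python sketch. It assumes you already have two inputs from your own tooling: the list of permissions granted to an identity and the set of actions it was observed using. The function name `review_grants` and the sample permission strings are illustrative, and the matching is case-sensitive glob matching, which is a simplification of how cloud providers evaluate policies.

```python
from fnmatch import fnmatchcase


def review_grants(granted, used):
    """Compare granted permissions with observed usage.

    Returns exact grants that were never used, plus, for each wildcard
    grant, the specific observed actions it could be narrowed to.
    """
    unused = []
    narrow_wildcards = {}
    for grant in granted:
        matched = sorted(action for action in used if fnmatchcase(action, grant))
        if "*" in grant:
            narrow_wildcards[grant] = matched
        elif not matched:
            unused.append(grant)
    return {"unused": sorted(unused), "narrow_wildcards": narrow_wildcards}


# Illustrative input: a role with one wildcard grant and one unused grant.
granted = ["s3:*", "iam:PassRole", "sqs:SendMessage"]
used = {"s3:GetObject", "s3:PutObject", "sqs:SendMessage"}

report = review_grants(granted, used)
print(report["unused"])            # ['iam:PassRole']
print(report["narrow_wildcards"])  # {'s3:*': ['s3:GetObject', 's3:PutObject']}
```

The observation window matters here: a permission used only during quarterly jobs will look unused if the usage data covers a single month, so the output is a candidate list for review rather than a set of automatic revocations.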

Regularly rotate NHI credentials

Static credentials, such as API keys, service account tokens, or embedded secrets, pose some of the greatest ongoing security risks. Unlike human passwords, which can be reset or expired easily, machine credentials are often designed for continuous automation and thus tend to live far longer than intended. This longevity makes them high-value targets for attackers: A single leaked or unrotated secret can provide persistent access to sensitive systems across cloud environments.

NHIs typically operate without human intervention. An AWS access key or an Azure service principal secret embedded in a build pipeline may silently authenticate thousands of times a day. If these sensitive credentials are ever leaked through a public repository, compromised build server, or third-party dependency, they can be exploited indefinitely unless automatic rotation mechanisms are in place. This creates an asymmetry of risk: Humans forget passwords, but machines never stop using theirs. As a result, organizations must treat credential rotation as an integral, automated stage of the NHI lifecycle rather than a manual maintenance task.

Regular key and credential rotation reduces the exposure window and ensures that even if a secret is compromised, its utility is short-lived. The table below shows how this can work for various credential types.

A practical example of implementing this at scale can be seen via Token Security, an automation platform designed to monitor, detect, and rotate NHI credentials safely. It scans all registered machine identities across environments (like AWS IAM users, Azure service principals, and GCP service accounts) and identifies the credentials nearing expiration or showing signs of inactivity.
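Independent of any particular platform, the core check behind rotation is simple: compare each credential's age against a maximum allowed lifetime. The sketch below shows that check in Python. The `Credential` record, the key IDs, and the 90-day limit are assumptions chosen for illustration; the creation timestamps would come from your cloud provider's inventory APIs.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Credential:
    """Minimal credential record (hypothetical schema)."""
    identity: str
    key_id: str
    created_at: datetime  # timezone-aware creation time


def rotation_due(credentials, max_age, now=None):
    """Return the credentials whose age exceeds max_age."""
    now = now or datetime.now(timezone.utc)
    return [c for c in credentials if now - c.created_at > max_age]


# Illustrative inventory; a fixed 'now' keeps the example deterministic.
inventory = [
    Credential("build-pipeline", "key-old", datetime(2024, 1, 1, tzinfo=timezone.utc)),
    Credential("build-pipeline", "key-new", datetime(2024, 5, 20, tzinfo=timezone.utc)),
]
now = datetime(2024, 6, 1, tzinfo=timezone.utc)

due = rotation_due(inventory, max_age=timedelta(days=90), now=now)
for cred in due:
    print(f"rotate {cred.key_id} for {cred.identity}")
```

A scheduled job could run a check like this and hand the resulting list to whatever mechanism actually issues the new secret and retires the old one.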

Automate onboarding and offboarding

In large-scale cloud environments, orphaned service accounts and leftover machine credentials are among the most persistent and dangerous security gaps. Unlike human users, NHIs and AI agents often go unnoticed after a workload is deprecated or an employee leaves the organization, yet they continue to hold valid credentials, secrets, or access keys long after their purpose has ended. Each lingering identity becomes an unmonitored entry point that attackers can exploit.

Effective automation of onboarding and offboarding ensures that non-human and AI agent identities are created, used, and destroyed in sync with the workloads they support. This automation is tied not to HR processes but to the infrastructure itself: It is wired directly into provisioning pipelines, infrastructure-as-code (IaC) templates, and CI/CD workflows. When a new application or service is deployed, the corresponding NHI should be automatically created with tightly scoped permissions, appropriate tags, and lifecycle metadata. Conversely, when that workload is terminated, its associated identity, keys, and secrets must be revoked immediately.

Organizations can create a closed lifecycle loop by integrating automation for offboarding with credential rotation workflows. Rotation ensures that active credentials remain short-lived and secure, while automated deprovisioning guarantees that expired or unused ones are fully removed. Together, these processes eliminate credential drift and reduce the risk of “zombie” identities that have been forgotten yet are still active in the background.
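One way to detect the "zombie" identities described above is to reconcile the identity inventory against the list of workloads that still exist. The following Python sketch assumes each identity carries a workload tag set at creation time and that you can obtain the set of active workloads from your deployment system. Both inputs and all names here are hypothetical.

```python
def find_orphaned(identities, active_workloads):
    """Return identities whose workload tag is missing or no longer active.

    identities: mapping of identity name -> workload tag (or None if untagged)
    active_workloads: set of workload names that currently exist
    """
    orphaned = []
    for name, workload in identities.items():
        if workload is None or workload not in active_workloads:
            orphaned.append(name)
    return sorted(orphaned)


# Illustrative inventory: one live workload, one retired, one untagged identity.
identities = {
    "svc-billing-api": "billing-api",
    "svc-legacy-export": "legacy-export",
    "svc-unknown": None,
}
active_workloads = {"billing-api"}

orphans = find_orphaned(identities, active_workloads)
print(orphans)  # ['svc-legacy-export', 'svc-unknown']
```

Treating untagged identities as orphaned is a deliberate choice in this sketch: an identity that cannot be linked to a workload cannot be offboarded with it, so it should at least be surfaced for review.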


Standardize tagging and classification

As organizations scale, the number of non-human and AI agent identities can easily grow into the thousands, spanning multiple accounts, regions, and cloud providers. Without a clear system for tagging and classification, these identities quickly become opaque, making it difficult to determine who owns them, what purpose they serve, or whether they are still needed. Effective tagging brings order and visibility to this sprawl, forming the foundation for automated governance and lifecycle management.

This table gives some examples of how tagging can be useful.

Advanced tools, such as the Token Security platform, take tagging and classification a step further. The platform continuously scans cloud environments to pull deep metadata for each NHI, correlating permissions, credential age, and behavioral context. By linking every NHI and AI agent to a verified owner and purpose, it creates a living inventory that enables automated policy enforcement, compliance checks, and rapid response to anomalies.

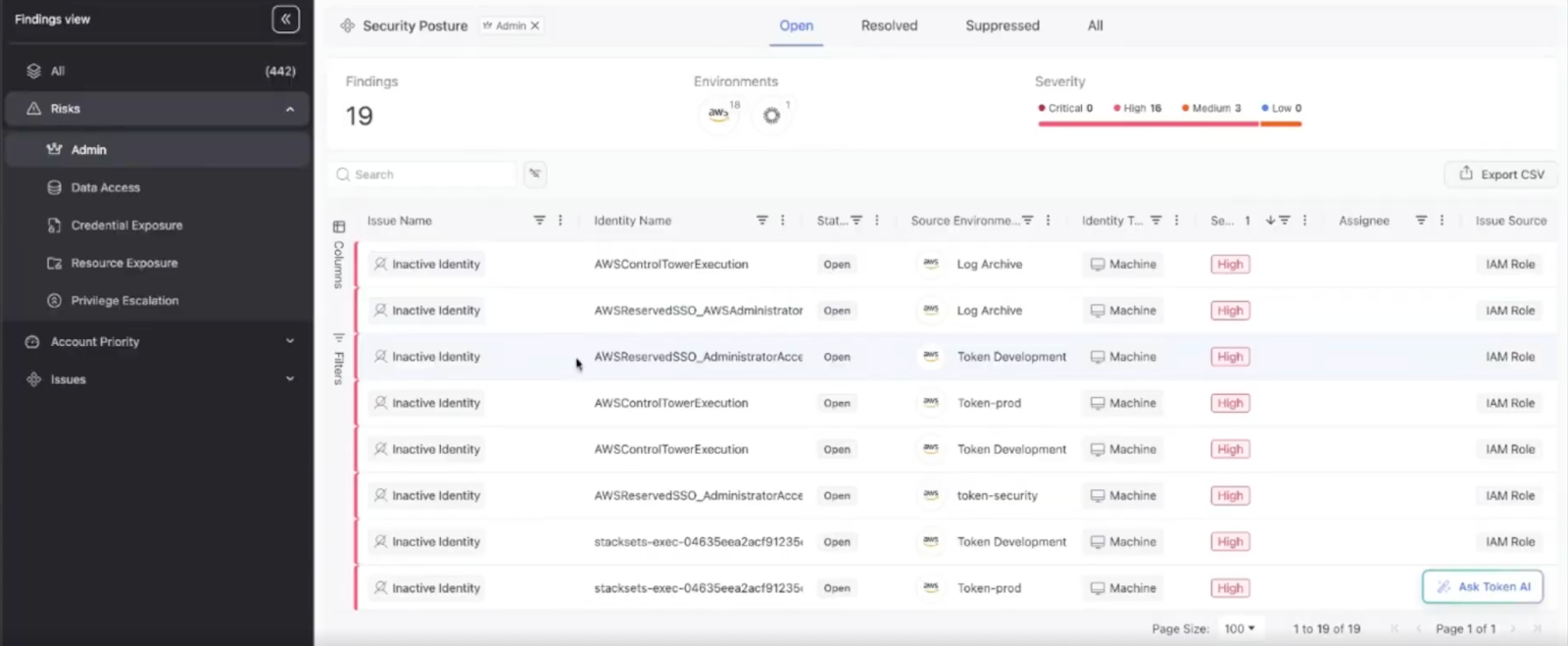
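A tagging standard is only useful if it is checked. As a rough illustration, the Python sketch below validates an identity's tags against a small schema. The required keys (`owner`, `purpose`, `environment`) and the allowed environment values are assumptions made for this example; substitute whatever your organization's standard defines.

```python
# Hypothetical tagging standard for this example.
REQUIRED_TAGS = ("owner", "purpose", "environment")
ALLOWED_ENVIRONMENTS = {"dev", "staging", "prod"}


def tag_violations(tags):
    """Return a list of human-readable problems with an identity's tags."""
    problems = [
        f"missing tag: {key}"
        for key in REQUIRED_TAGS
        if not tags.get(key, "").strip()
    ]
    env = tags.get("environment", "").strip()
    if env and env not in ALLOWED_ENVIRONMENTS:
        problems.append(f"invalid environment: {env}")
    return problems


# A compliant identity and a non-compliant one (illustrative values).
good = {"owner": "team-payments", "purpose": "nightly export", "environment": "prod"}
bad = {"owner": "", "environment": "qa"}

print(tag_violations(good))  # []
print(tag_violations(bad))
```

A check like this could run in a CI/CD pipeline so that an identity without a valid owner and purpose is rejected before it is ever created.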

Implement effective posture management

NHIs are inherently dynamic and prone to drift as workloads evolve, permissions change, and credentials age. Without continuous posture management, these identities can quickly deviate from intended security baselines and accumulate excessive privileges, outdated configurations, or missing metadata.

Effective posture management relies on automated, policy-driven audits that continuously evaluate each NHI against defined security controls and best practices. These audits detect issues such as overprivileged roles, missing tags, unrotated secrets, or expired credentials, ensuring that access and configuration remain aligned with governance expectations.

For example, a security audit in Google Cloud might identify a service account that hasn’t been used in 120 days but still retains Editor permissions across multiple projects. The posture management system flags it as stale and overprivileged, triggering automated remediation by either reducing its permissions or decommissioning it entirely.
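The Google Cloud scenario above can be expressed as a small policy check. The Python sketch below is illustrative only: the `ServiceAccountState` record, the 90-day staleness threshold, the account email, and the rule that stale accounts are decommissioned while active but overprivileged ones have permissions reduced are all assumptions. `roles/editor` and `roles/owner` are the identifiers of Google Cloud's basic Editor and Owner roles.

```python
from dataclasses import dataclass

# Assumed policy values for this sketch.
BROAD_ROLES = {"roles/owner", "roles/editor"}
STALE_AFTER_DAYS = 90


@dataclass(frozen=True)
class ServiceAccountState:
    """Snapshot of one service account (hypothetical schema)."""
    email: str
    days_since_last_use: int
    roles: frozenset


def evaluate_posture(sa):
    """Return the posture findings that apply to a service account."""
    findings = []
    if sa.days_since_last_use >= STALE_AFTER_DAYS:
        findings.append("stale")
    if sa.roles & BROAD_ROLES:
        findings.append("overprivileged")
    return findings


def recommended_action(findings):
    """Map findings to a remediation (an assumed policy, not a standard)."""
    if "stale" in findings:
        return "decommission"
    if "overprivileged" in findings:
        return "reduce permissions"
    return "none"


# The scenario from the text: unused for 120 days, still holding Editor.
account = ServiceAccountState(
    email="export-job@example-project.iam.gserviceaccount.com",
    days_since_last_use=120,
    roles=frozenset({"roles/editor"}),
)
findings = evaluate_posture(account)
print(findings, "->", recommended_action(findings))
```

Keeping detection (`evaluate_posture`) separate from remediation (`recommended_action`) makes it easier to run the audit in report-only mode before enabling automated changes.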


Conclusion

Managing non-human and AI agent identities requires a structured, automated approach across the entire lifecycle, from onboarding and tagging to credential rotation, monitoring, and offboarding. Implementing least privilege, continuous posture management, and consistent metadata practices ensures that NHIs and AI agents remain secure, traceable, and auditable, reducing the risk of compromise while supporting operational efficiency.

Ultimately, treating NHI and AI agent lifecycle management as a first-class security practice is essential: Every machine and AI agent identity must be provisioned, monitored, and retired deliberately to safeguard your cloud environment.
