Tagging Identities with AI Access: Token Security Finds Shadow AI in Your Enterprise

Shadow AI is real, fast-moving, and hard to find. When AI agents act inside an organization, they may appear as service accounts, roles, connected apps, or non-human users, or they may not appear at all. That makes them easy to miss with traditional Identity and Access Management (IAM) and security tools, and this blind spot is where risk hides.

Today we’re launching our Tagging Identities with AI Access feature in the Token Security Platform. It gives you immediate visibility into the identities that are likely being accessed or used by AI agents so you can detect shadow AI, assess risk and manage permissions with confidence.

What Challenges Are Organizations Facing with Shadow AI?

Every AI action in your environment, whether it enriches context, calls a tool, or carries out a directive, happens through an identity. That makes identity an efficient and effective control plane for AI, and the best gate to guard.

It often starts with an innocuous non-human identity: a role created for a short-lived project, a connected app pressed into service by a developer, or an API key tucked into a CI job. To a human reviewer those identities look ordinary. To an AI agent, they are the perfect conduit: a non-human identity with privileges that let the agent read data, call services, or act on behalf of your infrastructure. That gap between what you can see and what an agent actually uses is where shadow AI lives, and it’s exactly the blind spot Token Security’s new Tagging Identities with AI Access feature was built to close.

How Is Token Security Solving These Challenges?

Tagging Identities with AI Access is a core feature in the Token Security platform. Rather than relying solely on brittle keyword scans, this new feature layers semantic name detection with platform-specific logic and usage analysis to flag non-human identities that are likely accessed or used by AI agents. When an identity meets the detection rules, Token Security applies a durable AI_ACCESS tag so security teams can find, investigate, and remediate AI-driven access at scale.

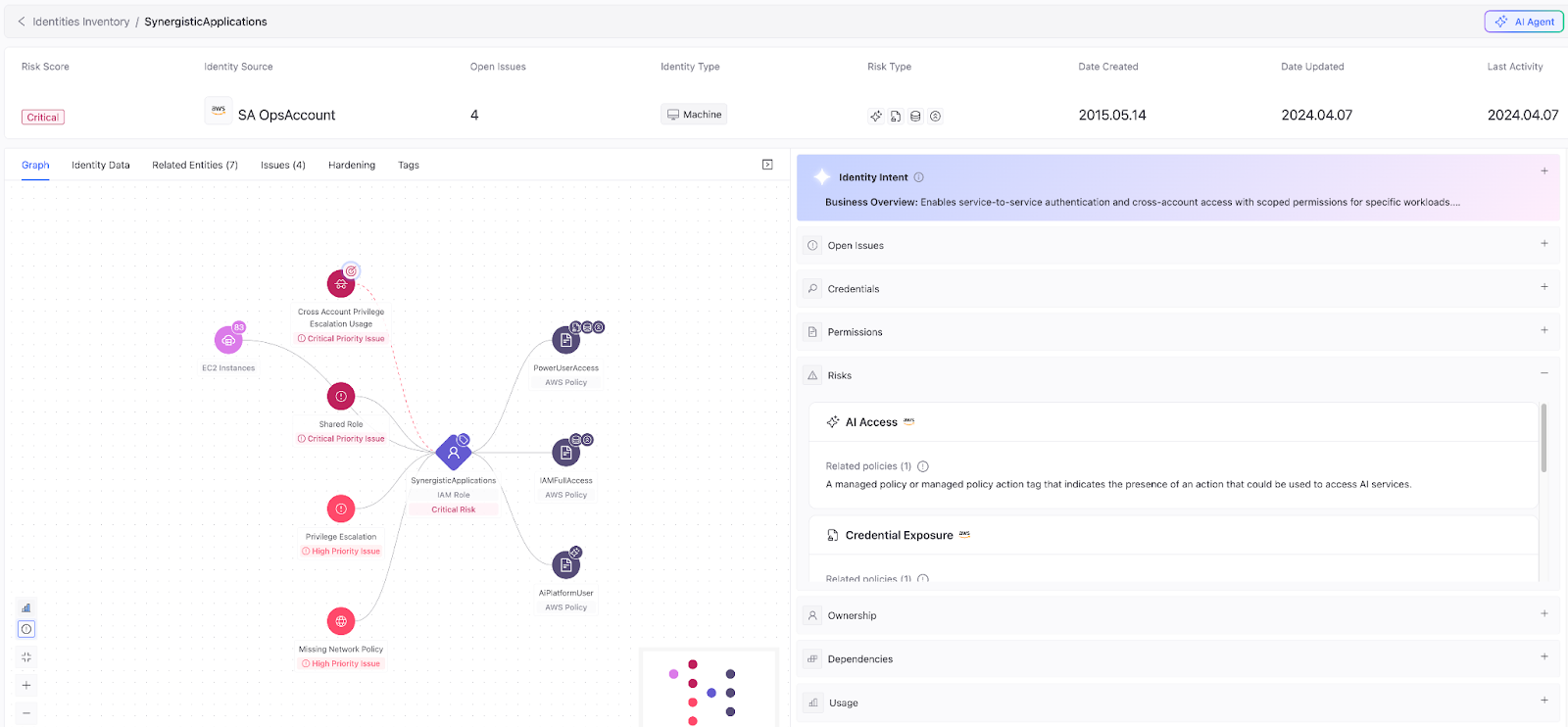

The approach is intentionally pragmatic. A strong example is our analysis of trust policies in AWS. If an IAM role’s trust policy allows it to be assumed by known AI service principals such as Amazon Bedrock or SageMaker, that role gets the AI_ACCESS tag. When the trust policy doesn’t tell the story, name-based heuristics act as a fallback and user/group naming patterns are scanned for AI-related indicators.

Another example is Google Cloud. With the powerful toolset offered by Vertex AI, there are many ways for AI agents to access identities. We analyze jobs, pipelines, instances, and endpoints and tag the associated service accounts as AI-accessible. Those Vertex AI attachments are surfaced as Dependencies in the SIP side panel so you can trace the relationship from identity to AI resource. When attachments aren’t present, descriptive metadata and naming cues provide the fallback signal.
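To make the AWS trust-policy check concrete, here is a minimal Python sketch of the idea, not Token Security's actual implementation. It inspects a role's trust policy document for AI service principals and falls back to a name pattern when none are found. The principal list, the name pattern, and the function names are assumptions chosen for illustration.

```python
import re

# Assumed list for illustration; the product's real detection rules are not public.
AI_SERVICE_PRINCIPALS = {"bedrock.amazonaws.com", "sagemaker.amazonaws.com"}

# Hypothetical fallback pattern: AI-related tokens delimited by separators.
AI_NAME_PATTERN = re.compile(
    r"(^|[-_./\s])(ai|llm|gpt|agent|bedrock|sagemaker)($|[-_./\s])",
    re.IGNORECASE,
)


def _as_list(value):
    """IAM policy fields may hold a single value or a list; normalize to a list."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def ai_principals_in_trust_policy(policy):
    """Return the AI service principals that an Allow statement trusts."""
    found = set()
    for stmt in _as_list(policy.get("Statement")):
        if stmt.get("Effect") != "Allow":
            continue
        principal = stmt.get("Principal")
        if not isinstance(principal, dict):
            continue
        for service in _as_list(principal.get("Service")):
            if service.lower() in AI_SERVICE_PRINCIPALS:
                found.add(service.lower())
    return sorted(found)


def classify_role(role_name, trust_policy):
    """Prefer the trust-policy signal; fall back to the name heuristic."""
    principals = ai_principals_in_trust_policy(trust_policy)
    if principals:
        return {"tag": "AI_ACCESS", "reason": "trust_policy", "evidence": principals}
    if AI_NAME_PATTERN.search(role_name):
        return {"tag": "AI_ACCESS", "reason": "name_heuristic", "evidence": [role_name]}
    return None
```

In this sketch a role named "data-export" that trusts bedrock.amazonaws.com is tagged for its trust policy, while a role named "llm-agent-runner" with an unrelated trust policy is tagged only through the noisier name fallback.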

Microsoft Entra ID and Salesforce present different shapes of evidence. For Entra ID, Tagging examines service principals and app registrations for AI-related credentials, names, or configuration hints that suggest programmatic AI consumption. With Salesforce, connected app metadata and OAuth callback configurations reveal cases where an app’s integration points map toward agentic use. We’ve extended similar principles to Okta, Snowflake, and Google Workspace, scanning API key names, non-human user fields, OAuth app metadata, and profile fields, so the tagging works across a customer’s operational footprint.

This layered model matters because it raises confidence without sacrificing coverage. Name-based rules are fast and broad, but noisy. Configuration and attachment signals are higher fidelity and tell you not only that an identity looks like an AI identity, but why. That ‘why’ is what makes a tag actionable: AI_ACCESS includes the reason it was applied (trust policy, Vertex attachment, OAuth callback, and so on), so remediation can be precise.
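One way to picture a tag that carries its own reason is sketched below. This is an illustrative data model, not the platform's schema: the `Signal` class, the `build_tag` function, and the fidelity ranking are all assumptions, with configuration and attachment signals ranked above name matches as the paragraph above describes.

```python
from dataclasses import dataclass

# Assumed fidelity ranking, for illustration only.
FIDELITY = {
    "trust_policy": 3,
    "vertex_attachment": 3,
    "oauth_callback": 2,
    "name_heuristic": 1,
}


@dataclass(frozen=True)
class Signal:
    kind: str      # which detector fired
    evidence: str  # what the detector observed


def build_tag(signals):
    """Return an AI_ACCESS tag listing reasons strongest first, or None if no signal fired."""
    if not signals:
        return None
    ranked = sorted(signals, key=lambda s: FIDELITY.get(s.kind, 0), reverse=True)
    return {
        "tag": "AI_ACCESS",
        "primary_reason": ranked[0].kind,
        "reasons": [(s.kind, s.evidence) for s in ranked],
    }
```

Keeping every reason, rather than only the strongest, lets a reviewer see when a high-fidelity signal and a name match agree.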

The result is immediate and practical. Security teams gain rapid visibility into identities that should be treated differently from standard service accounts. They may require heightened monitoring, stricter least-privilege controls, or different lifecycle management. On launch, the feature identified thousands of likely AI identities in customer environments. For example, more than 2,800 were identified at one large enterprise and hundreds at others, demonstrating how quickly shadow AI can accumulate when identities aren’t treated as a first-class signal.

When you see an AI_ACCESS tag, the next steps are familiar to any practitioner: review the tag reason, inspect the attached policy or resource, and apply least-privilege and monitoring controls as appropriate. For the highest assurance, combine the tag with log ingestion and agent inventory correlation. Moving from static configuration analysis to active usage telemetry (sign-in logs, API calls, etc.) lets you detect agents that are actually executing through those identities and understand what tools they call.
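The correlation step can be sketched in a few lines of Python. The example assumes log events have already been normalized into dictionaries with hypothetical `identity` and `action` fields; real sign-in and API logs will need their own parsing. It returns only the tagged identities that actually show activity, along with the actions they performed.

```python
from collections import defaultdict


def active_tagged_identities(tagged_ids, events):
    """Map each tagged identity seen in the logs to the sorted actions it performed."""
    tagged = set(tagged_ids)
    actions = defaultdict(set)
    for event in events:
        identity = event.get("identity")
        if identity in tagged:
            actions[identity].add(event.get("action", "unknown"))
    return {identity: sorted(acts) for identity, acts in actions.items()}
```

Tagged identities that are missing from the result have the configuration to be used by an agent but no observed usage in the ingested window, which is a useful distinction when prioritizing review.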

What’s Coming Next From Token Security

Tagging Identities with AI Access is a foundational feature. We expect to expand AI platform coverage and to tighten the linking between agent inventories and identity signals so teams can map intent, prompt context, and knowledge bases back to the identities that exercise access. For defenders, the essential shift is clear: protecting AI means finding shadow AI instances and protecting the identities AI agents use. Tagging brings those identities into view, and once they’re visible, you can treat them with the controls and cadence that modern AI-driven systems require.

To see our Tagging Identities with AI Access feature in action, request a demo of the Token Security platform today.
