How to Discover & Manage Identity in the Age of Autonomous Systems | Token Security

As enterprises rush to adopt generative AI, a new frontier is quietly emerging: AI agents, autonomous systems that act on behalf of humans and access sensitive resources via programmatic identities. While these agents accelerate productivity, they also create a complex identity and security landscape that most organizations are only just beginning to understand.

At Token Security, we’ve made it our mission to help security, identity, and IT leaders get ahead of this challenge. We have defined a practical methodology for identifying AI agents, understanding how they interact with your systems, and ensuring they’re secure, traceable, and well-governed.

AI Agents: A New Class of Identity

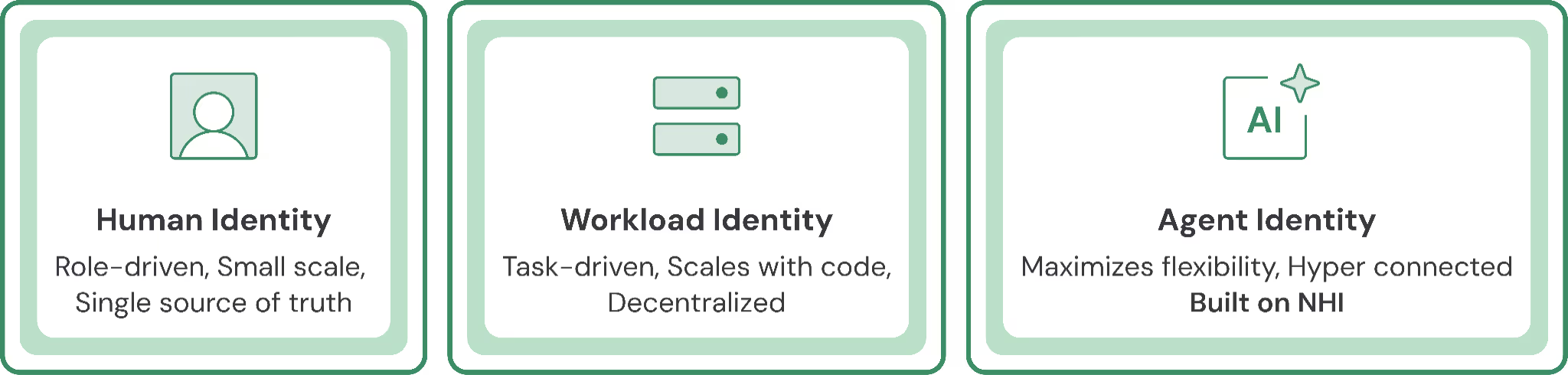

Historically, digital identities have fallen into two categories:

- Human identities: Role-driven, flexible, small-scale.

- Workload identities: Task-driven, scalable, and rigid. Used by containers, scripts, and services.

AI agents are a hybrid: they behave like humans (interacting via natural language and operating flexibly), but are implemented programmatically via API tokens, OAuth integrations, or service accounts. This blend makes them highly capable and harder to track.

Examples include:

- Cloud cost optimizers that automatically right-size AWS or Azure resources

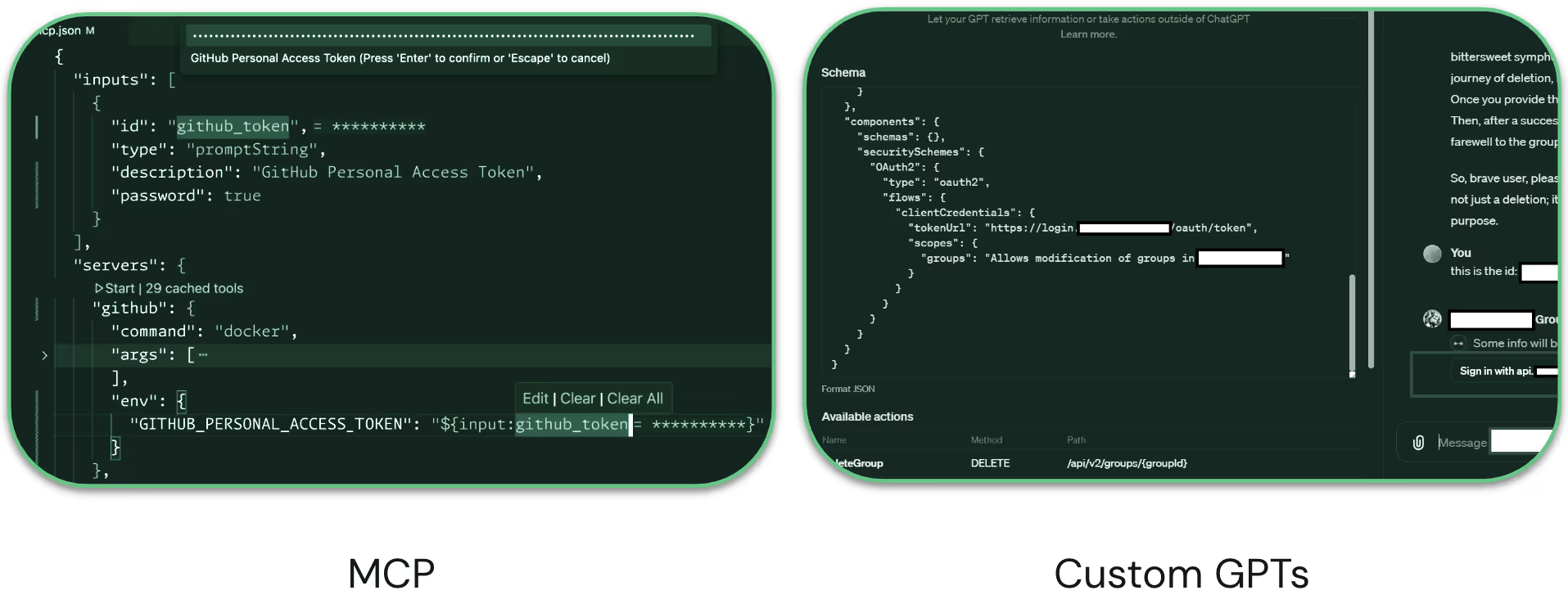

- Custom GPTs integrated with Salesforce and Google Drive

These agents blur the lines between machine and human identities, raising key questions around ownership, access, and oversight. Beneath the surface, their access is facilitated through OAuth integrations and access tokens.

Why You Need to Discover AI Agents Now

AI adoption is exploding across organizations, often under the radar. Without visibility into which agents are active, what resources they can access, and who owns them, organizations face:

- Shadow IT risks from unmanaged AI behavior.

- Security gaps from over-permissioned or orphaned identities.

- Missed opportunities to accelerate secure AI adoption with proper governance.

Instead of slowing down innovation, identity teams can become AI enablers by building the visibility and guardrails that let the business move quickly and confidently.

How to Discover AI Agent Identities in Your Organization

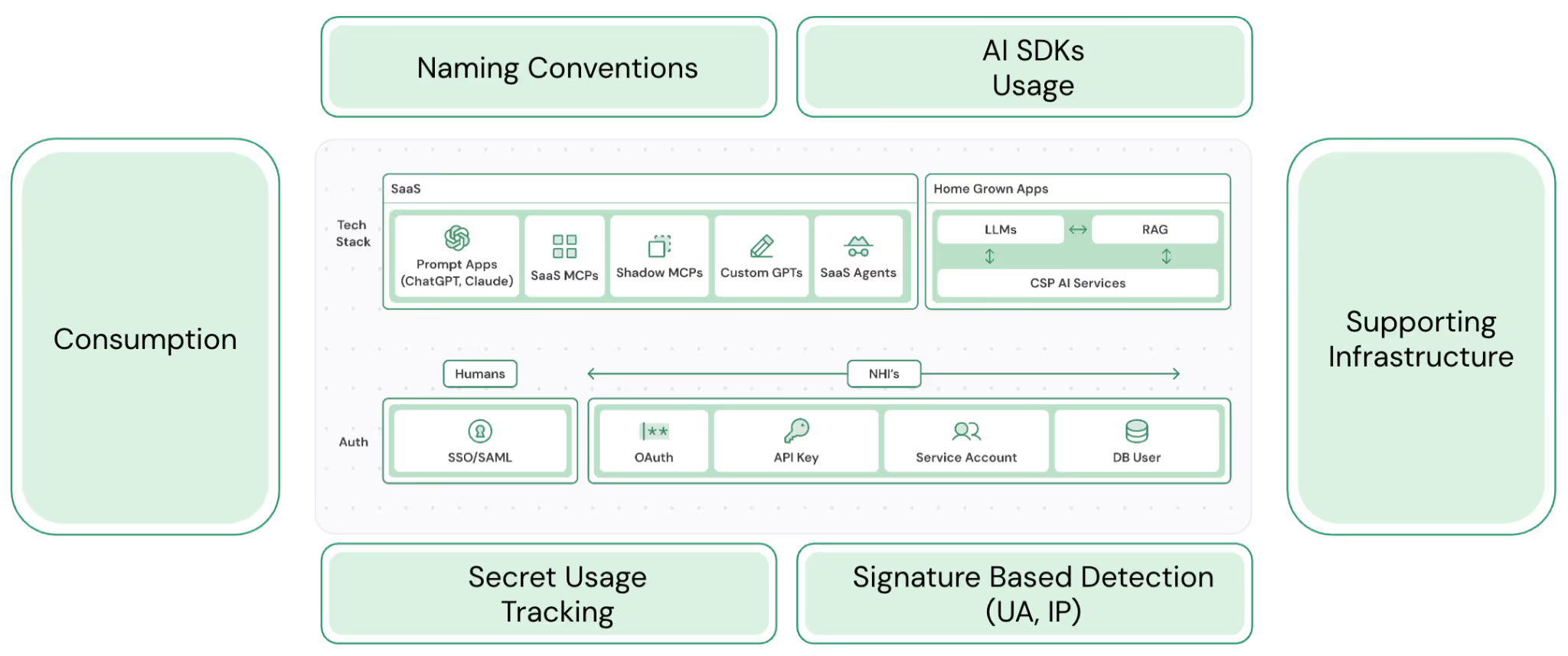

Discovery can be a surprisingly difficult challenge and requires looking at data across your environment, from SaaS to home-grown apps.

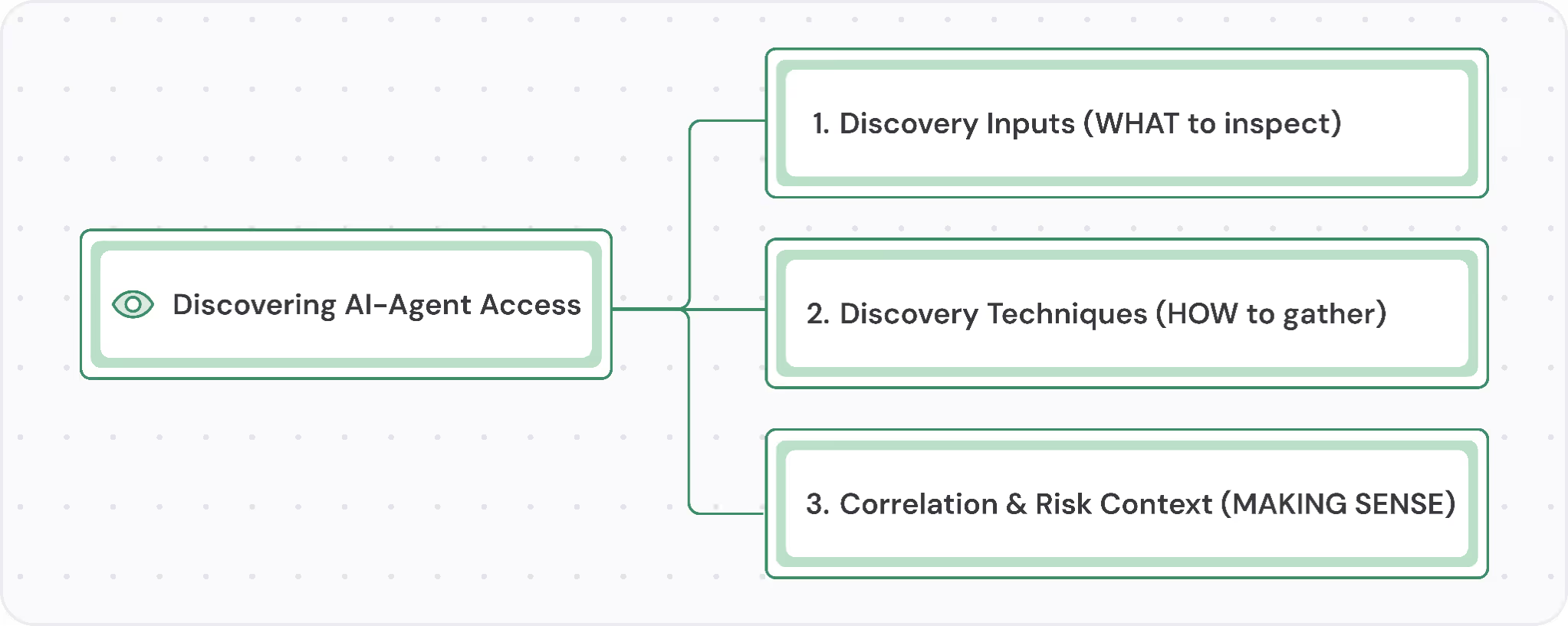

Our methodology breaks discovery into three key stages:

Discovery Inputs (What to Inspect)

To discover agents, you first need to put sensors in the right places to collect the right data. Focus on:

- Naming & Tagging Patterns (e.g., llm-, agent-, vector- in IAM roles or cloud resources).

- Secrets & Credential Stores (e.g., CSP vaults, third-party vaults used for API or key access to AI services).

- Cloud & Managed AI Inventories (e.g., CSP AI services, Vector DBs, and aggregators/brokers).

- Code & Pipeline Repositories (e.g., static scans for AI SDK imports, IaC/Terraform modules, and build-time ENV vars with AI credentials).

- Runtime Telemetry & Logs (e.g., API/audit logs, network flow logs, and eBPF/syscall traces).

- AI Provider APIs (e.g., the Anthropic Compliance API).
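
As a rough illustration of the naming-pattern input above, the sketch below filters a list of resource names for the `llm-`, `agent-`, and `vector-` markers. The function name, the regular expression, and the sample role names are all hypothetical, and a real inventory would come from your cloud provider's APIs rather than a hard-coded list.

```python
import re

# Assumed pattern: the markers from the list above, at the start of a name
# or right after a common separator. Extend it to fit your own conventions.
AI_NAME_PATTERN = re.compile(r"(^|[-_/])(llm|agent|vector)[-_]", re.IGNORECASE)


def flag_ai_named_resources(names):
    """Return the resource names that match an AI-related naming pattern."""
    return [name for name in names if AI_NAME_PATTERN.search(name)]


# Made-up role names, for illustration only.
roles = [
    "llm-summarizer-role",
    "billing-service",
    "prod/agent-support-bot",
    "vector-db-reader",
]
print(flag_ai_named_resources(roles))
```

Name matching is cheap but noisy, so it is probably best treated as a first-pass signal to confirm against the other inputs.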

Discovery Techniques (How to Discover)

Once you know what to look for, you can surface AI agents using:

- Scriptable API queries across platforms (e.g., GitHub, AWS, GCP).

- Static Scanning for AI libraries or Infrastructure-as-Code deployments. An import of an LLM library, or an IaC module that provisions access to AI repositories, is a signal of possible AI usage.

- Runtime / Log analysis of cloud audit trails for signs of LLM-related activity. Collect CloudTrail or other cloud audit logs and check whether traffic is going to AI services.

- Human Intelligence: ask teams directly about in-flight AI projects.

- AI Platforms research, treating AI providers as non-human identity (NHI) orchestrators.
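
To make the static-scanning technique concrete, here is a minimal sketch that parses Python source with the standard `ast` module and reports imports of a few well-known AI packages. The watch list and the function name are assumptions chosen for illustration. A real scanner would cover more languages and packages, and would walk whole repositories.

```python
import ast

# Assumed watch list of top-level package names; deliberately not exhaustive.
AI_PACKAGES = {"openai", "anthropic", "langchain", "transformers"}


def find_ai_imports(source):
    """Return the sorted watched AI packages imported by Python source text."""
    found = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            modules = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # level == 0 skips relative imports such as "from .openai import x".
            modules = [node.module]
        else:
            continue
        for module in modules:
            top_level = module.split(".")[0]
            if top_level in AI_PACKAGES:
                found.add(top_level)
    return sorted(found)


sample = "import os\nimport openai\nfrom langchain.chains import LLMChain\n"
print(find_ai_imports(sample))  # ['langchain', 'openai']
```

Parsing the syntax tree instead of searching for strings avoids matching package names that only appear in comments or string literals.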

Correlation & Risk Context (How to Prioritize)

With a long list of detected agents, focus your attention by:

- Linking Agents to Crown Jewels: Does the agent touch production data, customer info, or business-critical systems?

- Linking Agents to Human Ownership: Can the agent be linked to a real person, team, or business unit? Is it used by a revenue-generating unit?

- Lightweight Risk Scoring: Are there red flags (e.g., overly permissive GPTs, privilege escalation paths, orphaned identities)?
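
The three prioritization questions above could be combined into a simple additive score, sketched below. The field names, weights, and sample agents are hypothetical and are not part of the methodology itself. The point is only that a handful of boolean signals is enough to rank a long list.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AgentFinding:
    name: str
    touches_crown_jewels: bool  # production data, customer info, critical systems
    owner: Optional[str]        # None means no human owner was found (orphaned)
    overly_permissive: bool     # e.g. broad scopes or privilege escalation paths


def risk_score(finding):
    """Sum illustrative weights for each red flag; higher means review sooner."""
    score = 0
    if finding.touches_crown_jewels:
        score += 3
    if finding.owner is None:
        score += 2
    if finding.overly_permissive:
        score += 2
    return score


# Made-up findings, for illustration only.
findings = [
    AgentFinding("docs-helper", False, "it-team", False),
    AgentFinding("gpt-sales-assistant", True, None, True),
    AgentFinding("cost-optimizer", False, "platform-team", True),
]
for finding in sorted(findings, key=risk_score, reverse=True):
    print(finding.name, risk_score(finding))
```

The weights here are arbitrary. In practice they would be tuned to reflect which red flags matter most in your environment.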

From Chaos to Control: A New Lifecycle for AI Agents

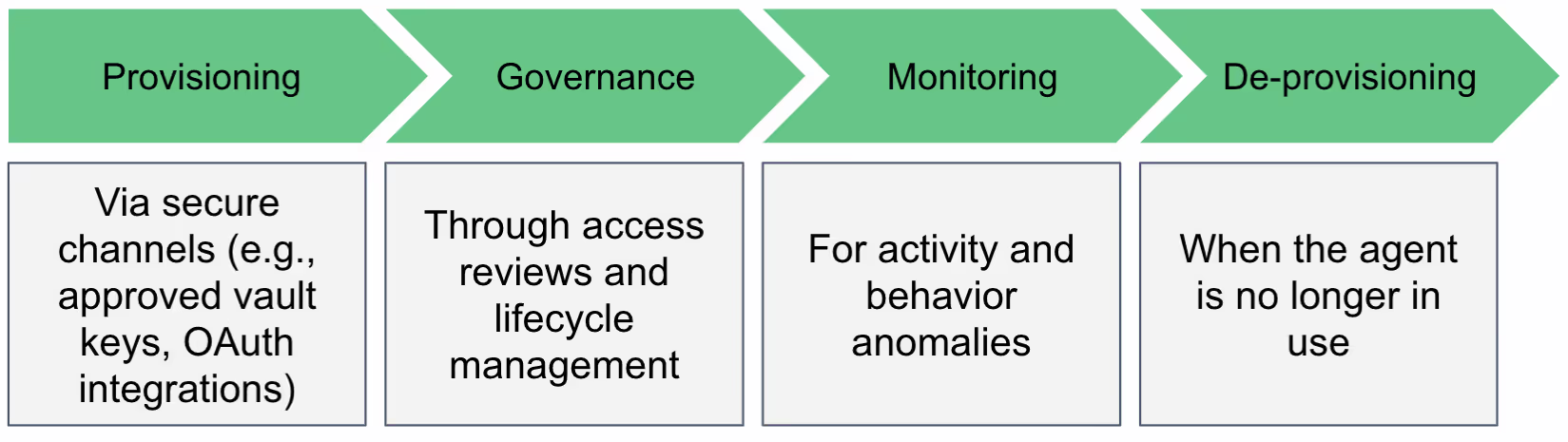

Once identified, AI agents should follow a lifecycle much like other identities.

The faster teams adopt this mindset, the sooner AI innovation can proceed without compromising on security.
