Securing Agentic AI: Why Everything Starts with Identity

The Shift That Changed the Conversation

For decades, enterprise security was about drawing lines around systems, enforcing access controls, and making sure every identity, whether human or machine, had the right level of privilege. Then came SaaS, which brought in a tidal wave of shadow apps, and cloud, which shattered the neat boundaries of on-prem IT. And now, we are entering a new frontier that is just as disruptive to how we approach security: agentic AI.

In recent focus group discussions we conducted with enterprise CISOs, one theme rose above the noise: AI agents are different, not simply because they are smarter scripts or fancy workflows, but because they blur the very boundaries of identity. They can reason, they can invoke other agents, and they can persist or disappear without warning.

CISOs know that every boardroom is already buzzing with AI initiatives. From marketing teams deploying copilots, to developers leaning on coding assistants, to business units experimenting with autonomous decision-making, the wave has already hit. The security challenge is no longer hypothetical. It is here today.

Why Identity Is the Linchpin

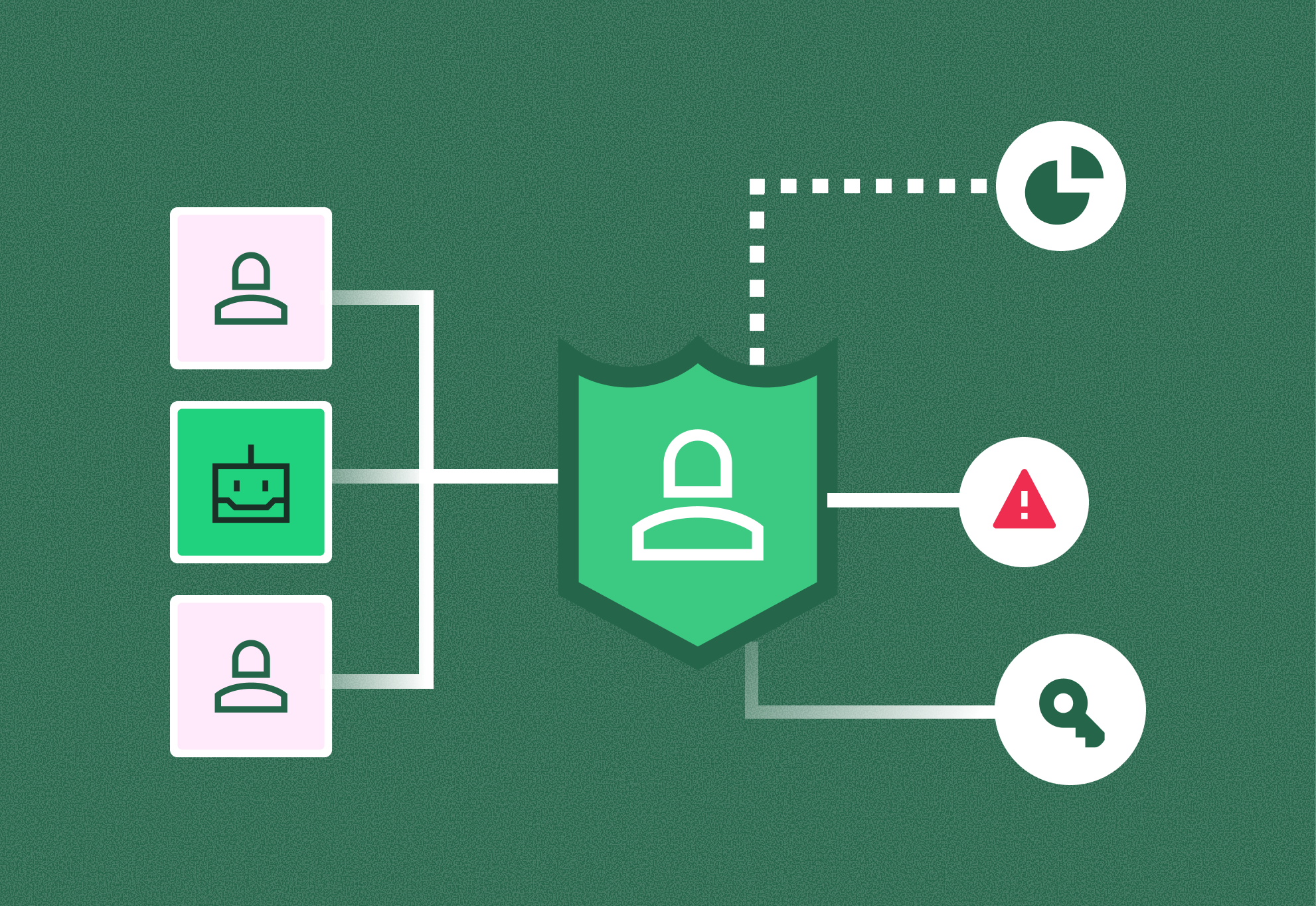

If you peel back the layers of AI adoption, identity consistently emerges as the linchpin. Every agent needs credentials to act. Every action must be performed on behalf of someone. Every piece of data an agent touches raises questions of entitlement: Should this agent have seen this? Should it have done that?

In traditional systems, non-human identities such as service accounts and API keys behave deterministically. Their behavior is predictable: you know what they do, and you scope their permissions accordingly. Agents are not deterministic. They are goal-driven, and in pursuing those goals, they may take unpredictable paths.

Treating AI agents as just another service account is dangerously misleading. Without deliberate identity governance, agents can quickly become over-privileged actors in your environment, with too much autonomy and too little traceability.

The Multi-Agent Problem

CISOs in our focus group highlighted one of the thorniest identity challenges: multi-agent architectures. When an agent calls another, which may then call a third, the original user’s identity often gets lost, making it impossible to enforce least privilege, understand risk, and maintain compliance.

Imagine a salesperson asks an AI assistant to prepare a customer briefing. That request might flow through an orchestrator agent, which invokes a data-retrieval agent, which then invokes a summarization agent. At the end of the chain, data is pulled from sensitive systems. But was the original salesperson entitled to see that data? Does the downstream agent even know?

This loss of context is more than a technical quirk. It’s an identity breakdown. Without mechanisms to propagate the originating user’s entitlements through every step, enterprises risk giving agents broad, standing access that far exceeds what is appropriate.
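To make "propagating the originating user’s entitlements" concrete, here is a minimal Python sketch. It assumes a hypothetical in-process `DelegationContext` object handed from agent to agent; the agent IDs and scope names are invented for illustration. The design choice it demonstrates is that each hop’s effective permissions are the intersection of the originating user’s entitlements and the downstream agent’s own grants, so access can only narrow along the chain. In practice this context would more likely travel as a signed token; OAuth 2.0 Token Exchange (RFC 8693), for example, defines an `act` (actor) claim for expressing delegation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DelegationContext:
    """Identity context carried through every hop of a multi-agent request (hypothetical model)."""
    originating_user: str             # the human who made the original request
    effective_scopes: frozenset[str]  # what this hop is actually allowed to do
    chain: tuple[str, ...] = ()       # agents the request has passed through, in order


def start_request(user: str, user_entitlements: set[str]) -> DelegationContext:
    """The first context is bounded by the requesting user's own entitlements."""
    return DelegationContext(user, frozenset(user_entitlements))


def delegate(ctx: DelegationContext, agent_id: str, agent_scopes: set[str]) -> DelegationContext:
    """Hand the request to another agent.

    The downstream agent gets only the intersection of what the originating
    user may do and what the agent itself was granted, so privileges can
    shrink along the chain but never grow.
    """
    return DelegationContext(
        originating_user=ctx.originating_user,
        effective_scopes=ctx.effective_scopes & frozenset(agent_scopes),
        chain=ctx.chain + (agent_id,),
    )


def authorize(ctx: DelegationContext, required_scope: str) -> bool:
    """Check a downstream action against the propagated context, not the agent's standing grants."""
    return required_scope in ctx.effective_scopes


# The customer-briefing example: salesperson -> orchestrator -> data retrieval -> summarizer.
ctx = start_request("salesperson@example.com", {"crm:read", "contracts:read"})
ctx = delegate(ctx, "orchestrator", {"crm:read", "contracts:read", "finance:read"})
ctx = delegate(ctx, "data-retrieval", {"crm:read", "finance:read"})
ctx = delegate(ctx, "summarizer", {"crm:read"})

print(ctx.chain)                     # ('orchestrator', 'data-retrieval', 'summarizer')
print(authorize(ctx, "crm:read"))      # True
print(authorize(ctx, "finance:read"))  # False: the salesperson was never entitled to it
```

Even though the data-retrieval agent holds `finance:read` on its own, it cannot use it on this salesperson’s behalf, and every downstream action can still be tied back to `ctx.originating_user`.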

Agent Lifecycle and Accountability

Another recurring theme was agent lifecycle neglect. Agents are spun up quickly, often for experimentation. A developer tests a workflow. A security team automates a triage process. A business unit creates a customer-facing assistant. But few organizations have defined offboarding processes for agents.

This results in zombie agents that continue to exist, holding permissions, consuming resources, and creating attack surfaces long after their usefulness has passed.

Accountability compounds the problem. Every human account has an owner. Who owns an agent? Who is responsible when it misbehaves or accesses data it shouldn’t? In the absence of clarity, accountability dissolves.
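A lifecycle review can start as a simple pass over an agent inventory. The Python sketch below assumes a hypothetical `AgentRecord` holding an owner, a permission set, and a last-activity timestamp, and uses an arbitrary 30-day idle threshold. It flags two of the problems described above: agents with no accountable owner, and zombie agents that sit idle while still holding permissions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class AgentRecord:
    """One entry in a hypothetical agent inventory."""
    agent_id: str
    owner: Optional[str]   # named human owner; None if nobody has claimed the agent
    permissions: set[str]
    last_active: datetime  # timezone-aware timestamp of last observed activity


def review_inventory(agents: list[AgentRecord], now: datetime,
                     stale_after: timedelta = timedelta(days=30)) -> dict[str, list[str]]:
    """Flag unowned agents and zombies (idle longer than stale_after but still holding permissions)."""
    findings: dict[str, list[str]] = {"unowned": [], "zombie": []}
    for agent in agents:
        if not agent.owner:
            findings["unowned"].append(agent.agent_id)
        if agent.permissions and now - agent.last_active > stale_after:
            findings["zombie"].append(agent.agent_id)
    return findings


now = datetime(2025, 1, 31, tzinfo=timezone.utc)
inventory = [
    AgentRecord("triage-bot", "secops@example.com", {"tickets:write"}, now - timedelta(days=2)),
    AgentRecord("poc-workflow", None, {"crm:read", "s3:read"}, now - timedelta(days=90)),
    AgentRecord("support-assistant", "cx@example.com", {"kb:read"}, now - timedelta(days=45)),
]
print(review_inventory(inventory, now))
# {'unowned': ['poc-workflow'], 'zombie': ['poc-workflow', 'support-assistant']}
```

The forgotten experiment (`poc-workflow`) shows up on both lists, which is exactly the kind of agent that should be offboarded first.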

Business and Compliance Risks

The technical challenges are real, but the business and compliance risks are what keep CISOs up at night. Regulators are already circling. The EU AI Act, ISO/IEC 42001, and evolving industry standards all point toward a future where companies must prove that AI systems are controlled, auditable, and compliant. Auditors will not be satisfied with vague assurances. They will want evidence:

- An inventory of agents

- Records of what they accessed

- Proof that permissions were limited to what was necessary

- Documentation of ownership and accountability

Failure to provide this evidence isn’t just a compliance headache; it’s a trust issue. Customers want to know that when they interact with an AI assistant, their data is safe. A single misstep could erode years of hard-earned brand credibility.
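Much of that evidence can come from recording every agent action together with the agent’s owner and the human it acted for. The Python sketch below is illustrative and in-memory only, not a production logging design; the field names are assumptions. Chaining each entry to the SHA-256 hash of the previous one is one common way to make after-the-fact edits detectable.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """Hash a canonical JSON encoding of an entry (sorted keys keep it stable)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only, hash-chained record of agent actions (hypothetical, in-memory).

    Each entry embeds the hash of the previous entry, so editing or deleting a
    past record breaks verification from that point on.
    """

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, agent_id: str, owner: str, on_behalf_of: str,
               resource: str, scope: str) -> dict:
        body = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "agent_id": agent_id,          # which agent acted
            "owner": owner,                # who is accountable for the agent
            "on_behalf_of": on_behalf_of,  # the originating human requestor
            "resource": resource,          # what was accessed
            "scope": scope,                # which permission was exercised
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; False means some entry was altered or removed."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.record("summarizer", "sales-ops@example.com", "salesperson@example.com", "crm/accounts/123", "crm:read")
log.record("summarizer", "sales-ops@example.com", "salesperson@example.com", "crm/accounts/456", "crm:read")
print(log.verify())  # True

log.entries[0]["resource"] = "crm/accounts/999"  # simulate tampering with history
print(log.verify())  # False
```

In a real deployment these records would be shipped to a hardened, access-controlled store; the point of the sketch is which fields answer the auditor’s questions.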

Where CISOs Must Start

The message from enterprise security leaders is clear. The time to start creating an agentic AI security plan is now. The longer you wait, the harder it will be to rein in agent adoption. A practical starting point includes:

- Discover and Map: Identify every agent in the environment. Which systems do they touch? Which credentials do they use? Without visibility, governance is impossible.

- Enforce Least Privilege: Instead of granting blanket access "just in case," scope agents to the narrowest set of entitlements aligned with their intent. In multi-agent architectures where agents create other agents, child agents should not automatically inherit the parent agent’s permissions. Automate privilege reviews to ensure agents don’t drift into pseudo-admin status.

- Bind Identity Across Chains: Develop mechanisms to carry user identity and entitlements through multi-agent workflows. Ensure every downstream action can be tied back to the original requestor.

- Establish Ownership: Require that every agent has a named owner responsible for its purpose, permissions, and retirement. Ownership is the foundation of accountability.

- Conduct Access Reviews: Run periodic agent access reviews in which owners attest that the agents they own are still required and appropriately managed.

- Plan for Audits: Assume regulators and auditors will ask tough questions. Begin capturing evidence of agent activity today, not tomorrow.
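The least-privilege and ownership steps above can be combined into a provisioning check for sub-agents. This Python sketch shows one possible policy, using hypothetical `Agent` and `spawn_child` names: a child receives only the scopes it explicitly requests rather than a copy of its parent’s, it cannot request anything the parent lacks, and it must resolve to a named owner.

```python
from dataclasses import dataclass
from typing import Optional


class ProvisioningError(Exception):
    """Raised when a sub-agent request violates the (hypothetical) provisioning policy."""


@dataclass
class Agent:
    agent_id: str
    owner: str
    scopes: frozenset[str]
    parent: Optional["Agent"] = None  # lineage back to the agent that created this one


def spawn_child(parent: Agent, child_id: str, requested_scopes: set[str],
                owner: Optional[str] = None) -> Agent:
    """Create a sub-agent under an illustrative policy:

    - the child gets only the scopes it explicitly requests, never a copy of the parent's;
    - it may not request anything the parent does not hold (no escalation);
    - it must have a named owner, defaulting to the parent's owner.
    """
    requested = frozenset(requested_scopes)
    excess = requested - parent.scopes
    if excess:
        raise ProvisioningError(f"{child_id} requested scopes its parent lacks: {sorted(excess)}")
    child_owner = owner or parent.owner
    if not child_owner:
        raise ProvisioningError(f"{child_id} has no accountable owner")
    return Agent(child_id, child_owner, requested, parent)


orchestrator = Agent("orchestrator", "sales-ops@example.com",
                     frozenset({"crm:read", "crm:write", "email:send"}))
retriever = spawn_child(orchestrator, "data-retrieval", {"crm:read"})
print(sorted(retriever.scopes))  # ['crm:read']
print(retriever.owner)           # sales-ops@example.com

try:
    spawn_child(retriever, "exporter", {"crm:read", "s3:write"})
except ProvisioningError as err:
    print(err)  # exporter requested scopes its parent lacks: ['s3:write']
```

Defaulting the owner to the parent’s owner is a convenience; an organization could just as reasonably require an explicit owner for every new agent.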

Start with Identity as the Foundation

Identity has always been the backbone of enterprise security. In the era of agentic AI, it becomes the foundation. If identity governance fails, everything built on it, from data protection to compliance to business trust, fails with it.

The challenge is urgent, but CISOs don’t need to reinvent the wheel. The fundamentals of identity and access management still apply; they just need to be adapted, extended, and enforced with the realities of agentic AI in mind.

AI is rewriting the rules of technology adoption at breakneck speed. To keep pace, CISOs must rewrite the rules of identity governance. The organizations that move now will be the ones that avoid tomorrow’s headlines and preserve tomorrow’s trust.

Call to Action: 5 Questions Every CISO Should Ask About Agent Identity

- Do we know how many AI agents currently exist in our environment? If you don’t have a discovery process, you don’t have visibility.

- Who owns each agent? Ownership drives accountability. An unowned agent is an unmanaged risk.

- Can we trace an agent’s action back to the originating human requestor? If not, your multi-agent workflows may already be breaking identity context.

- Are any of our agents “over-privileged” with broad or standing access? Least privilege must apply to agents just as strictly as it does to humans.

- Could we produce audit-ready evidence tomorrow if regulators asked? If the answer is no, the time to build logging, lineage, and reporting is now.

CISOs can use these questions to start the conversation with their teams and set the stage for securing the entire AI ecosystem. Token Security provides a purpose-built solution for securing agentic AI by providing visibility, control, and governance. To learn more, schedule a demo of the Token Security Platform.
